Beginner’s understanding of Convolutional Neural Networks (CNN)

I have just started learning Deep Learning and I hope that by writing it out, I can get a deeper understanding of the topic (you see what I did there). Be sure to point out my mistakes!

For this, I am not going to dive deep into the math behind it, such as the matrix multiplication and summation, but you’ll get the rough idea after reading this. Plus, there are many tutorials out there that can do better than what I have here.

Let’s get started.

Say we have this image:

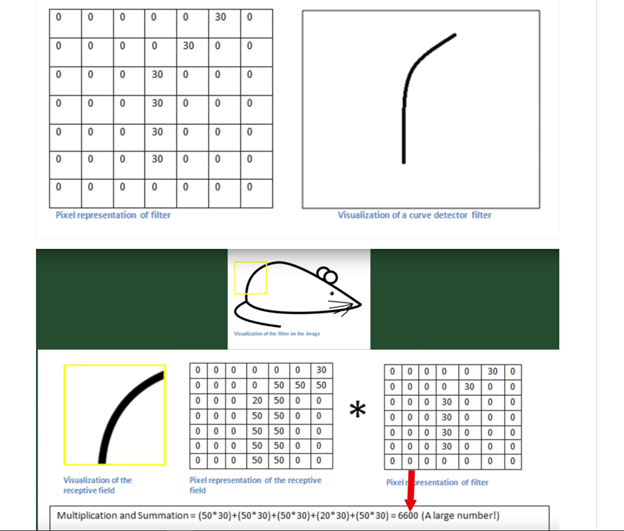

We want our model to recognize that it is a mouse. The first thing to know is that, to a computer, an image is a matrix of pixel values. Think of it this way: a color image has rows and columns of pixels, and each pixel has one value per color channel (red, green and blue), so you can picture three matrices stacked behind one another. Hence, a color image is just a 3-dimensional matrix. A black-and-white (grayscale) image has only 1 channel, so it is simply a 2-dimensional matrix. Now, how do we get our model to recognize the mouse? Let’s take inspiration from how we humans see things, using the mammalian visual cortex. Roughly speaking, when we see an object, it is processed through many layers of neurons, and different neurons activate for different features. In Machine Learning, the detectors for these features are called features, filters, weight matrices or kernels, and the terms are used interchangeably. From the first layer to the last, the patterns become more complex: early layers pick up simple things such as edges and curves, middle layers combine them into shapes such as circles and triangles, later layers respond to more abstract parts of the object, and finally the last layer is able to identify that it is indeed a mouse. These layers of feature detectors, i.e. the weight matrices, are exactly what our CNN needs to learn.
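To make the "an image is a matrix" idea concrete, here is a minimal sketch in plain Python using nested lists. The pixel values are made up, and `shape` is a hypothetical helper written only for this example. It stores the channels last (height × width × channels), which is one common convention; some libraries put the channels first instead.

```python
# A tiny 2x2 color image with made-up pixel values.
# rgb_image[row][col] holds the 3 channel values (R, G, B) of one pixel.
rgb_image = [
    [[255, 0, 0], [0, 255, 0]],        # red pixel, green pixel
    [[0, 0, 255], [255, 255, 255]],    # blue pixel, white pixel
]

# A tiny 2x2 grayscale image: a single intensity per pixel, i.e. a 2D matrix.
gray_image = [
    [0, 128],
    [200, 255],
]


def shape(matrix):
    """Hypothetical helper: return the dimensions of a nested list."""
    dims = []
    while isinstance(matrix, list):
        dims.append(len(matrix))
        matrix = matrix[0]
    return tuple(dims)


print(shape(rgb_image))   # (2, 2, 3) -> height, width, channels
print(shape(gray_image))  # (2, 2)    -> height, width (1 channel)
```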

Now, to build a CNN, we take a small window on our image of the mouse, also known as a receptive field, and compute the dot product between it and each of our weight matrices (multiply element by element, then sum the results). We slide the receptive field over the whole image, repeating this at every position, to generate an output. The basic idea is that we are applying a set of feature matrices to the original image to find the patterns in it. In the end, each weight matrix produces its own output matrix, called an activation map (or feature map), where high values mark the locations in which that feature was detected in the input image. There are many features that the model can learn. For example, suppose one feature matrix describes a curved line: if we multiply it with a part of the image that also has a curved line, it produces a large value! Conversely, if there is no curved line in that part of the image, the output will be small, close to 0 or even negative. This is called convolution, as we take two sets of matrices and combine them to form another set of output.
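Here is a minimal sketch of sliding one weight matrix over an image, in plain Python. The 5x5 image (a bright vertical line) and the 3x3 "vertical line" kernel are made-up values, and `convolve2d` is a hypothetical helper that uses no padding and moves one pixel at a time. Like most deep learning libraries, it does not flip the kernel, so strictly speaking it computes cross-correlation, which is what CNNs usually call convolution.

```python
def convolve2d(image, kernel):
    """Hypothetical helper: slide `kernel` over `image` (no padding, stride 1).

    Each output value is the dot product between the kernel and the
    receptive field, i.e. the patch of the image currently under the kernel.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map


# Made-up 5x5 grayscale image with a vertical line in the middle column.
image = [
    [0, 0, 1, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 1, 0, 0],
]

# Made-up kernel that responds to vertical lines.
kernel = [
    [-1, 2, -1],
    [-1, 2, -1],
    [-1, 2, -1],
]

print(convolve2d(image, kernel))  # [[-3, 6, -3], [-3, 6, -3], [-3, 6, -3]]
```

Where the window lines up with the line, the output is large (6); where it does not, the output is negative (-3).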

Then, we apply a function called ReLU (Rectified Linear Unit), which is an activation function. After convolution, we only care about the positive responses, i.e. the places where the image matches the pattern of the feature. So ReLU takes every negative value in the output matrix and turns it into 0, making it irrelevant, so that the relevant parts stand out in the later steps. It is that simple: negative values become 0 and positive values pass through unchanged, i.e. ReLU(x) = max(0, x). (Squashing values into a range between 0 and 1 is what other activation functions, such as the sigmoid, do.)
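A minimal sketch of ReLU applied to every value of a feature map. The input values are made up (the kind of output a vertical-line filter might give), and `relu_map` is a hypothetical helper name.

```python
def relu(x):
    """ReLU: negative values become 0, positive values pass through unchanged."""
    return max(0, x)


def relu_map(feature_map):
    """Hypothetical helper: apply ReLU to every value of a 2D feature map."""
    return [[relu(value) for value in row] for row in feature_map]


# Made-up feature map, e.g. the output of a vertical-line filter.
feature_map = [
    [-3, 6, -3],
    [-3, 6, -3],
    [-3, 6, -3],
]

print(relu_map(feature_map))  # [[0, 6, 0], [0, 6, 0], [0, 6, 0]]
print(relu(6))                # 6 (not squashed into the 0..1 range)
```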

Now, we perform what’s called pooling. After convolving all the weight matrices with the input, we end up with many large feature maps. Pooling shrinks them while keeping the most important information, and passes the result on to the next layer. Note that we repeat these steps (convolution, ReLU, pooling) several times, and each layer creates more feature maps. The most common kind is max pooling: we choose a window size of 2x2 and place the window on top of each output matrix that we found earlier. We slide the window over the whole matrix (typically 2 steps at a time, so the windows do not overlap) and keep only the maximum number in each window. This reduces the dimensions of the matrices; for example, a 2x2 window moved 2 steps at a time halves both the height and the width. Note that the patterns of the features mostly stay the same; the result is just a shrunken version of the feature map. For instance, for the part of the image that has a vertical straight line, the pooled matrix still contains numbers that correspond to a straight line, where the middle column of the matrix has higher values than the rest of the locations in the matrix.
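A minimal sketch of 2x2 max pooling that moves 2 steps at a time, in plain Python. The 4x6 feature map is made up to contain a vertical line, and `max_pool2d` is a hypothetical helper, not a library function.

```python
def max_pool2d(feature_map, size=2, stride=2):
    """Hypothetical helper: max pooling over a 2D feature map (no padding).

    Slides a size x size window over the map, moving `stride` cells at a
    time, and keeps only the largest value in each window.
    """
    pooled = []
    for i in range(0, len(feature_map) - size + 1, stride):
        row = []
        for j in range(0, len(feature_map[0]) - size + 1, stride):
            window = [feature_map[i + di][j + dj]
                      for di in range(size) for dj in range(size)]
            row.append(max(window))
        pooled.append(row)
    return pooled


# Made-up 4x6 feature map with strong responses along a vertical line
# (columns 2 and 3).
feature_map = [
    [0, 1, 6, 5, 0, 1],
    [1, 0, 5, 6, 1, 0],
    [0, 0, 6, 6, 0, 0],
    [1, 0, 5, 5, 0, 1],
]

print(max_pool2d(feature_map))  # [[1, 6, 1], [1, 6, 1]]
```

The 4x6 map shrinks to 2x3, but the middle column still holds the highest values, so the vertical-line pattern survives.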

Finally, we flatten the pooled matrices into one long list (a very tall stack) of all the output values, which is typically passed through one or more fully connected layers that produce one score per class. At the very end of this process, we apply a SoftMax function, which converts these scores into probability values that add up to 1. We classify the image by picking the class with the highest probability.
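Below is a minimal sketch of these last steps: flattening, a fully connected layer, SoftMax, and picking the most probable class. Everything here is an assumption for illustration: the class names, the single pooled feature map and the weights are made up (in a real network the weights are learned), and `flatten`, `dense` and `softmax` are hypothetical helpers, not a library API.

```python
import math


def flatten(feature_maps):
    """Turn a list of 2D feature maps into one long 1D list."""
    return [v for fm in feature_maps for row in fm for v in row]


def dense(inputs, weights, biases):
    """Fully connected layer: one weighted sum (plus bias) per class."""
    return [sum(w * x for w, x in zip(w_row, inputs)) + b
            for w_row, b in zip(weights, biases)]


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


classes = ["mouse", "cat", "dog"]   # hypothetical labels
pooled = [[[1, 6, 1], [1, 6, 1]]]   # one made-up pooled 2x3 feature map
weights = [
    [0, 1, 0, 0, 1, 0],                # hypothetical "mouse" weights
    [1, 0, 1, 1, 0, 1],                # hypothetical "cat" weights
    [0.5, 0.5, 0.5, 0.5, 0.5, 0.5],    # hypothetical "dog" weights
]
biases = [0, 0, 0]

x = flatten(pooled)                  # [1, 6, 1, 1, 6, 1]
scores = dense(x, weights, biases)   # [12, 4, 8.0]
probs = softmax(scores)
prediction = classes[probs.index(max(probs))]
print(prediction)  # mouse
```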

Of course, the first time we pass an image through the network, the feature matrices usually start out with random values, so the predictions will be wrong. The way we train the model is by using backpropagation. I will not explain too much about backpropagation as there are tons of tutorials on this. Basically, it uses the chain rule of derivatives across the layers in the network to work out how much each weight contributed to the error, and gradient descent then uses these gradients to nudge the weights in the direction that reduces the error. At the end of training, what you get is a fully functional Convolutional Neural Network that can classify images based on probability values.
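To see backpropagation and gradient descent working together on the smallest possible scale, here is a toy sketch with a single weight `w`, a single input `x`, a ReLU and a squared-error loss. It is a hypothetical example rather than a real CNN, and the numbers (input 2.0, target 6.0, learning rate 0.05, 50 steps) are arbitrary choices. The chain rule gives the gradient, and gradient descent uses it to update the weight.

```python
def relu(z):
    return max(0.0, z)


def relu_grad(z):
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if z > 0 else 0.0


def train(x, target, w=0.5, lr=0.05, steps=50):
    """Toy training loop for y = relu(w * x) with loss (y - target) ** 2."""
    for _ in range(steps):
        # Forward pass
        z = w * x
        y = relu(z)
        # Backward pass: chain rule dL/dw = dL/dy * dy/dz * dz/dw
        dL_dy = 2 * (y - target)
        dy_dz = relu_grad(z)
        dz_dw = x
        grad = dL_dy * dy_dz * dz_dw
        # Gradient descent: step against the gradient
        w -= lr * grad
    return w


w = train(x=2.0, target=6.0)
print(round(w, 3))  # 3.0
```

Since 3.0 × 2.0 = 6.0, the weight should end up near 3.0, which is what the printed value shows.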

A good time to use this neural network is when we want to classify images or other grid-like data (2D or 3D). We can even generate images using this kind of network, but we will get to that in another article. For now, it is enough to know that CNNs are best known for image classification.

There you go, I hope this article gives you a brief idea of how it works, but for in-depth knowledge, there are many tutorials out there that are worth watching. Thank you.

For the most part, I referred to Siraj Raval’s video here.